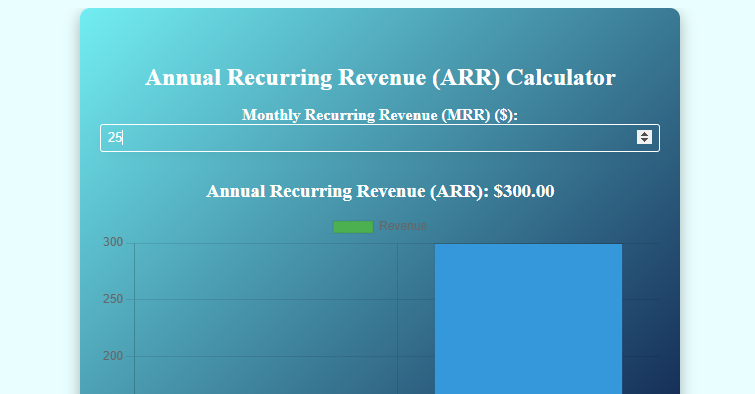

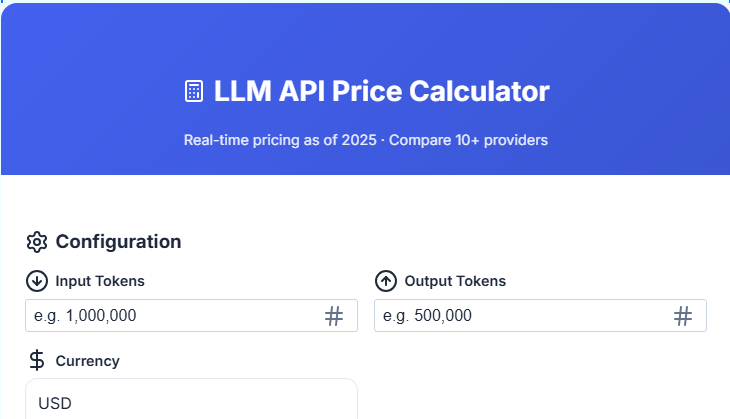

Stop Overpaying for AI: Your Ultimate LLM Cost Comparison Tool for 2025

If you’re building with AI, you know the feeling. That moment of awe at what a model can do, quickly followed by a wince when you check your API bill. Sound familiar?

You’re not alone. We’re all asking the same questions: “Is Claude actually cheaper for my long-form content?” or “Could switching to Gemini cut my costs in half?”

The guessing ends now. I built a free, real-time tool to take the pain out of pricing: the Ultimate LLM API Price Calculator.

This isn’t just another spreadsheet. It’s a live calculator that pulls the latest prices from all the major providers, so you can compare costs side-by-side and finally optimize your budget. Let’s break down how it works and how it can save you real money.

First, A Quick Refresher: Tokens & Why They Matter

Before we dive in, let’s get our terms straight. When you use a model like Grok, GPT-4, or Claude, you’re charged based on “tokens”: essentially, pieces of words.

- Input Tokens: The text you send (your prompt).

- Output Tokens: The text you get back (the AI’s response).

Prices are usually listed per million tokens, but for any serious project, those fractions of a cent add up shockingly fast. In 2025, with AI woven into everything from apps to voice assistants, controlling these costs isn’t a luxury—it’s essential for your project’s survival.
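To make the arithmetic concrete, here is a minimal Python sketch of how per-million pricing turns into a bill. The function name and the example workload (2,000 input and 500 output tokens per request, 100,000 requests a month) are assumptions for illustration, not the calculator's actual code.

```python
def estimate_cost(input_tokens, output_tokens, input_price_per_m, output_price_per_m):
    """Return the USD cost of a workload, given prices per 1M tokens."""
    input_cost = input_tokens / 1_000_000 * input_price_per_m
    output_cost = output_tokens / 1_000_000 * output_price_per_m
    return input_cost + output_cost


# Hypothetical workload: 100,000 requests a month,
# each with 2,000 input tokens and 500 output tokens,
# priced at $1.25 input / $10.00 output per 1M tokens.
requests_per_month = 100_000
monthly = estimate_cost(
    input_tokens=2_000 * requests_per_month,
    output_tokens=500 * requests_per_month,
    input_price_per_m=1.25,
    output_price_per_m=10.00,
)
print(f"Estimated monthly cost: ${monthly:,.2f}")  # Estimated monthly cost: $750.00
```

Notice that the output side ($500) costs twice as much as the input side ($250) here, even though there are four times fewer output tokens.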

Why a Live Calculator is a Game-Changer

Static blog posts with price tables are outdated almost the second they’re published. Providers tweak their rates constantly.

Our calculator solves this by fetching prices directly from the source, giving you a true apples-to-apples comparison. It’s also built for the way we search now. When you ask your phone, “Hey Google, compare AI model prices,” this tool gives you the instant, structured answer you’re looking for.

Meet the Calculator: Key Features at a Glance

Here’s what makes this tool different:

- Live Price Data: We pull the latest rates from OpenAI, Anthropic, Google, and others. No more guessing if the numbers are current.

- Side-by-Side Comparisons: Select multiple providers and models to see who gives you the most bang for your buck.

- Usage-Based Estimates: Plug in your expected token usage and get an immediate total cost, even converting to your local currency.

- Visual Cost Breakdown: Clear charts and tables show you exactly where your money is going.

- Mobile-Friendly: Perfect for checking costs on the go, right from your phone.

LLM API Prices at a Glance (2025)

Here’s a snapshot of current rates for the most popular models to give you an idea. Remember, our calculator has the live versions of these.

| Provider | Model | Input ($/1M Tokens) | Output ($/1M Tokens) | Key Note |
|---|---|---|---|---|
| OpenAI | GPT-5 | $1.25 | $10.00 | Cached inputs are just $0.125 |
| OpenAI | GPT-5 mini | $0.25 | $2.00 | A great budget option |
| Anthropic | Claude Sonnet 4.5 | $3.00 | $15.00 | For prompts under 200k tokens |
| Google | Gemini 2.5 Pro | $1.25 | $10.00 | Competitive with top-tier models |
| Google | Gemini 2.5 Flash | $0.30 | $2.50 | Excellent speed for the price |
| xAI | Grok-4 | $3.00 | $15.00 | Large 256k context window |
| Together AI | Llama 3.1 405B | $3.50 | $3.50 | Balanced input/output pricing |

How to Use the Calculator in 3 Easy Steps

It’s designed to be dead simple.

- Pick Your Models: Select the providers and specific models you want to compare—like putting GPT-5, Claude Sonnet, and Gemini Pro in your cart.

- Estimate Your Usage: Think about your average project. How long are your prompts (input tokens)? How long are the responses you need (output tokens)? The tool can help you count tokens if you’re unsure.

- Calculate & Compare: Hit the button. You’ll instantly see a total cost for each model, a clear breakdown, and a visual chart. It makes the best choice obvious.
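If you want to sanity-check the three steps by hand, they can be sketched in a few lines of Python. This is only an illustration: the prices are hard-coded from the snapshot table in this post (so they will go stale), and the `compare` function and the example usage figures are assumptions, not the tool's real implementation.

```python
# (input, output) prices in USD per 1M tokens, copied from the snapshot table.
PRICES = {
    "GPT-5": (1.25, 10.00),
    "GPT-5 mini": (0.25, 2.00),
    "Claude Sonnet 4.5": (3.00, 15.00),
    "Gemini 2.5 Pro": (1.25, 10.00),
    "Gemini 2.5 Flash": (0.30, 2.50),
    "Grok-4": (3.00, 15.00),
    "Llama 3.1 405B": (3.50, 3.50),
}


def compare(models, input_tokens, output_tokens):
    """Return (model, cost in USD) pairs sorted from cheapest to most expensive."""
    rows = []
    for name in models:
        input_price, output_price = PRICES[name]
        cost = input_tokens / 1e6 * input_price + output_tokens / 1e6 * output_price
        rows.append((name, round(cost, 2)))
    return sorted(rows, key=lambda row: row[1])


# Step 1: pick your models. Step 2: estimate usage (here 10M input, 2M output tokens).
ranking = compare(["GPT-5", "Claude Sonnet 4.5", "Gemini 2.5 Pro"], 10_000_000, 2_000_000)

# Step 3: compare.
for name, cost in ranking:
    print(f"{name:<20} ${cost:>8.2f}")
```

With these example numbers, GPT-5 and Gemini 2.5 Pro tie at $32.50, while Claude Sonnet 4.5 comes to $60.00.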

Pro Tip: Don’t just chase the cheapest input token. Consider the context window. A model with a larger window, like Grok-4, might handle a complex task in one call, while a cheaper model with a smaller window might need several calls that cost more in total.

Frequently Asked Questions (FAQs)

Q1: Which LLM API is the cheapest overall?

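For simple, high-volume tasks, the budget tiers are usually the most cost-effective. In the snapshot above, Google’s Gemini 2.5 Flash costs $0.30 input / $2.50 output per 1M tokens, and GPT-5 mini comes in slightly lower at $0.25 / $2.00. However, “cheapest” depends entirely on your use case. The calculator helps you find the true cheapest for your specific needs.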

Q2: What’s the difference between input and output token pricing?

Output tokens are almost always more expensive. Think of it like this: reading a recipe (input) is easier than writing a new one from scratch (output). The computational cost is higher for generating text.

Q3: Are these prices guaranteed to be exact?

No. We pull data directly from provider APIs and websites to keep the numbers as accurate as possible, but rates can change. For official quotes and enterprise-tier pricing, always double-check the provider’s official documentation.

Q4: How can I reduce my LLM API costs?

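- Use Caching: For repeat queries, use cached inputs if the provider offers them (like OpenAI does). In the table above, GPT-5 cached inputs cost $0.125 instead of $1.25 per 1M tokens, a 90% cut on the cached portion of your input.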

- Model Matching: Don’t use a sledgehammer to crack a nut. Use a powerful, expensive model for complex tasks and a lighter, cheaper one for simple classifications or summaries.

- Optimize Prompts: Well-structured, clear prompts can reduce token waste and get you the output you need in fewer tokens.
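To see what caching is worth for your own workload, here is a rough Python sketch. It uses the GPT-5 input prices from the table in this post ($1.25 regular, $0.125 cached per 1M tokens); the function name and the 80% cache-hit fraction are assumptions for illustration, and real cache behavior depends on the provider's rules.

```python
def input_cost_with_cache(input_tokens, price_per_m, cached_price_per_m, cached_fraction):
    """Return the USD input cost when a fraction of input tokens is billed at the cached rate."""
    cached_tokens = input_tokens * cached_fraction
    fresh_tokens = input_tokens - cached_tokens
    return fresh_tokens / 1e6 * price_per_m + cached_tokens / 1e6 * cached_price_per_m


monthly_input_tokens = 100_000_000  # hypothetical monthly volume

baseline = input_cost_with_cache(monthly_input_tokens, 1.25, 0.125, cached_fraction=0.0)
with_cache = input_cost_with_cache(monthly_input_tokens, 1.25, 0.125, cached_fraction=0.8)

print(f"No caching:    ${baseline:.2f}")    # No caching:    $125.00
print(f"80% cache hit: ${with_cache:.2f}")  # 80% cache hit: $35.00
```

The full 90% saving only applies to tokens that actually hit the cache, so the overall reduction depends on how repetitive your prompts are.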

Ready to Take Control of Your AI Budget?

In the end, smart AI development isn’t just about building cool features—it’s about building sustainably. Wasting money on inefficient API calls slows you down.

This calculator is here to help you build faster and smarter. Give it a try, see how much you could save, and get back to what you do best: creating amazing things with AI.

I’d love to hear what you think. Which model combination saved you the most? Drop me a line and let me know.